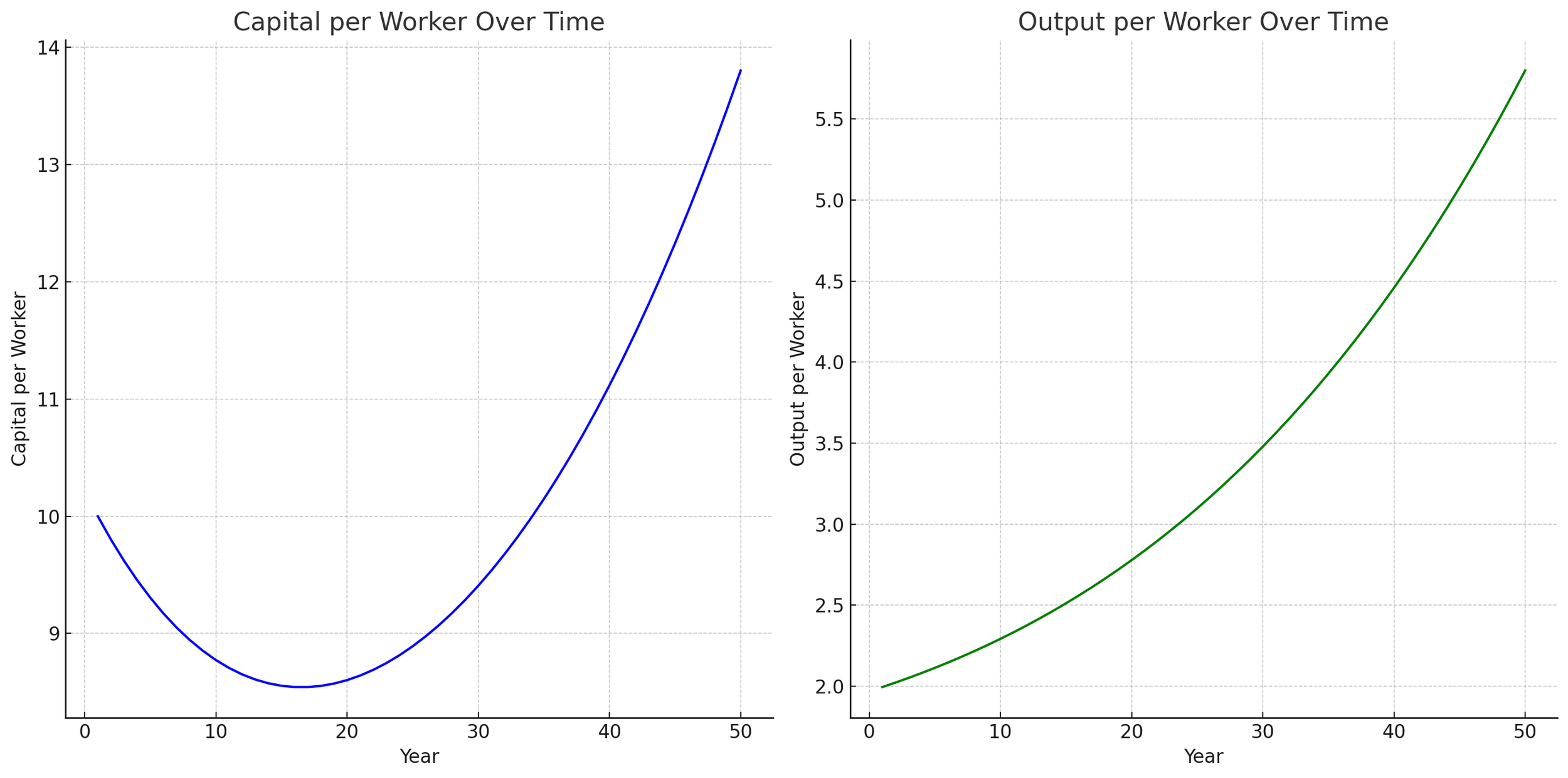

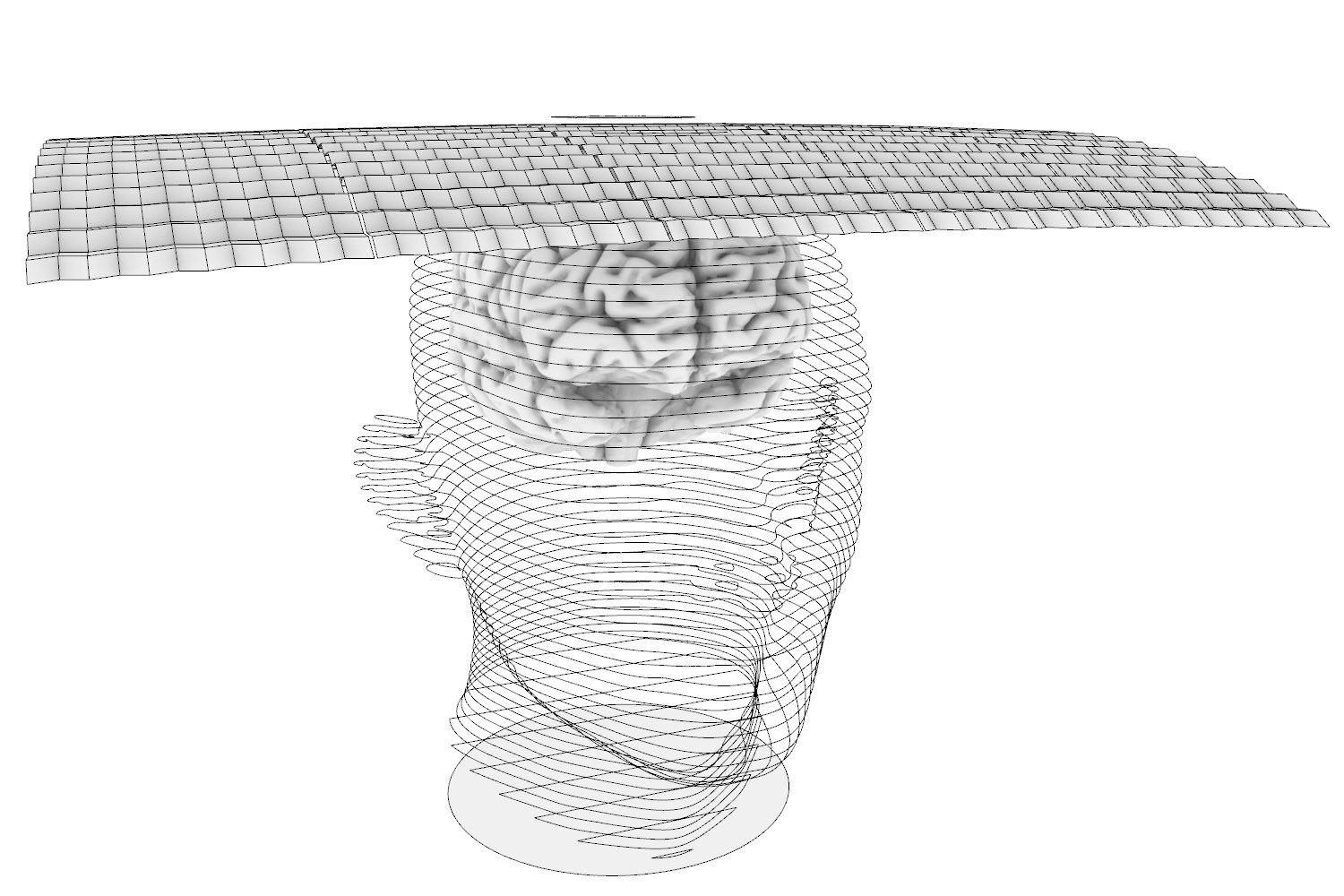

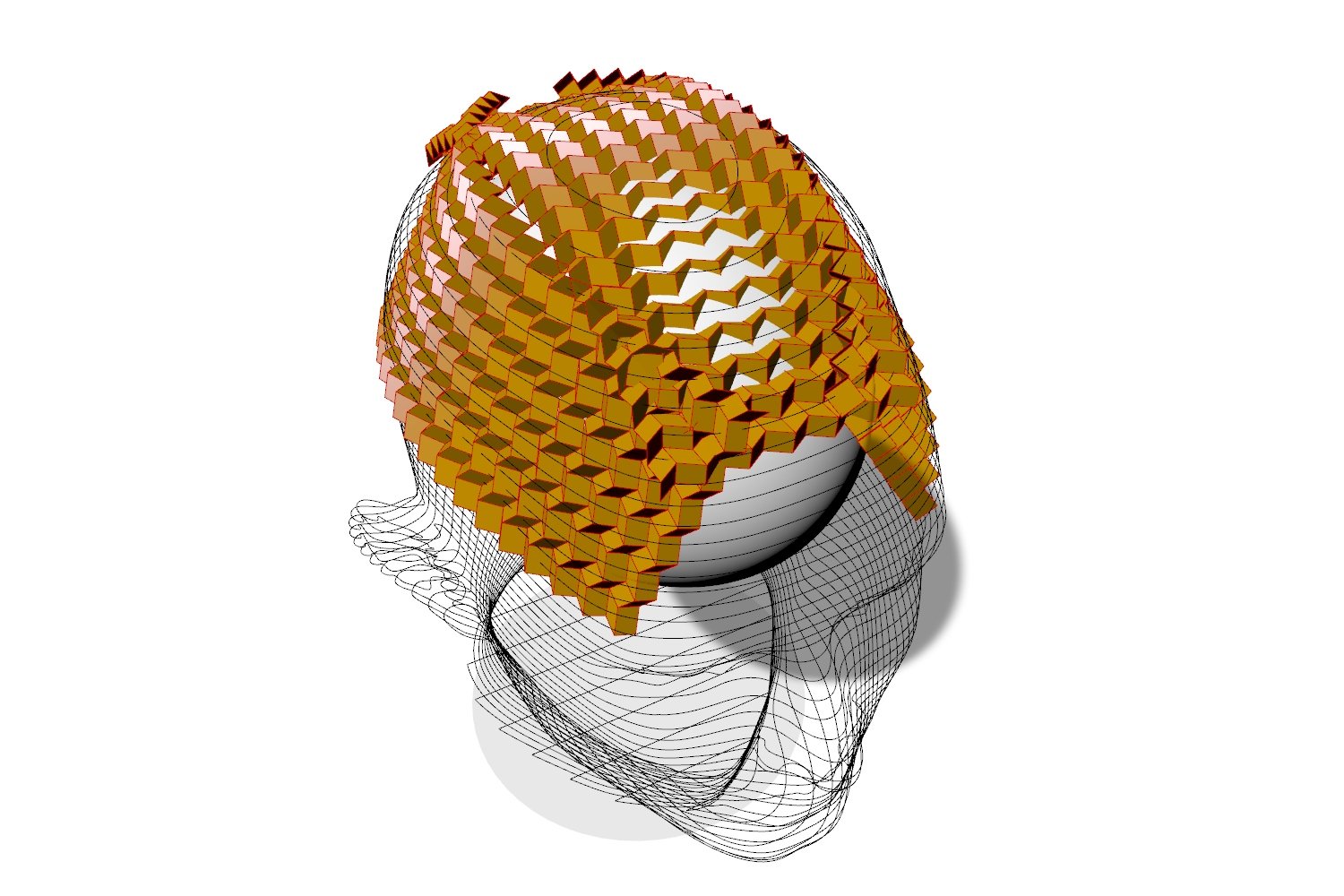

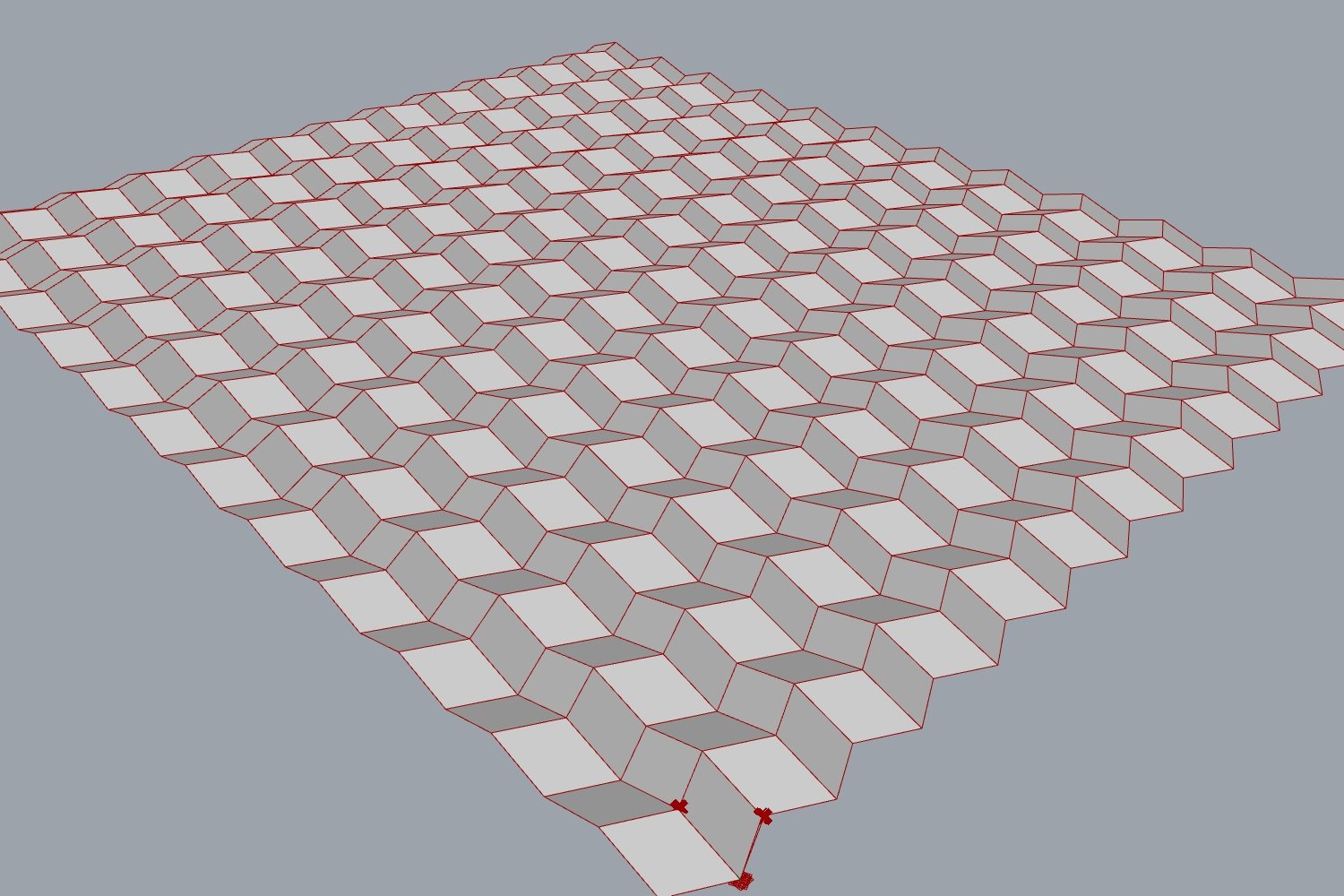

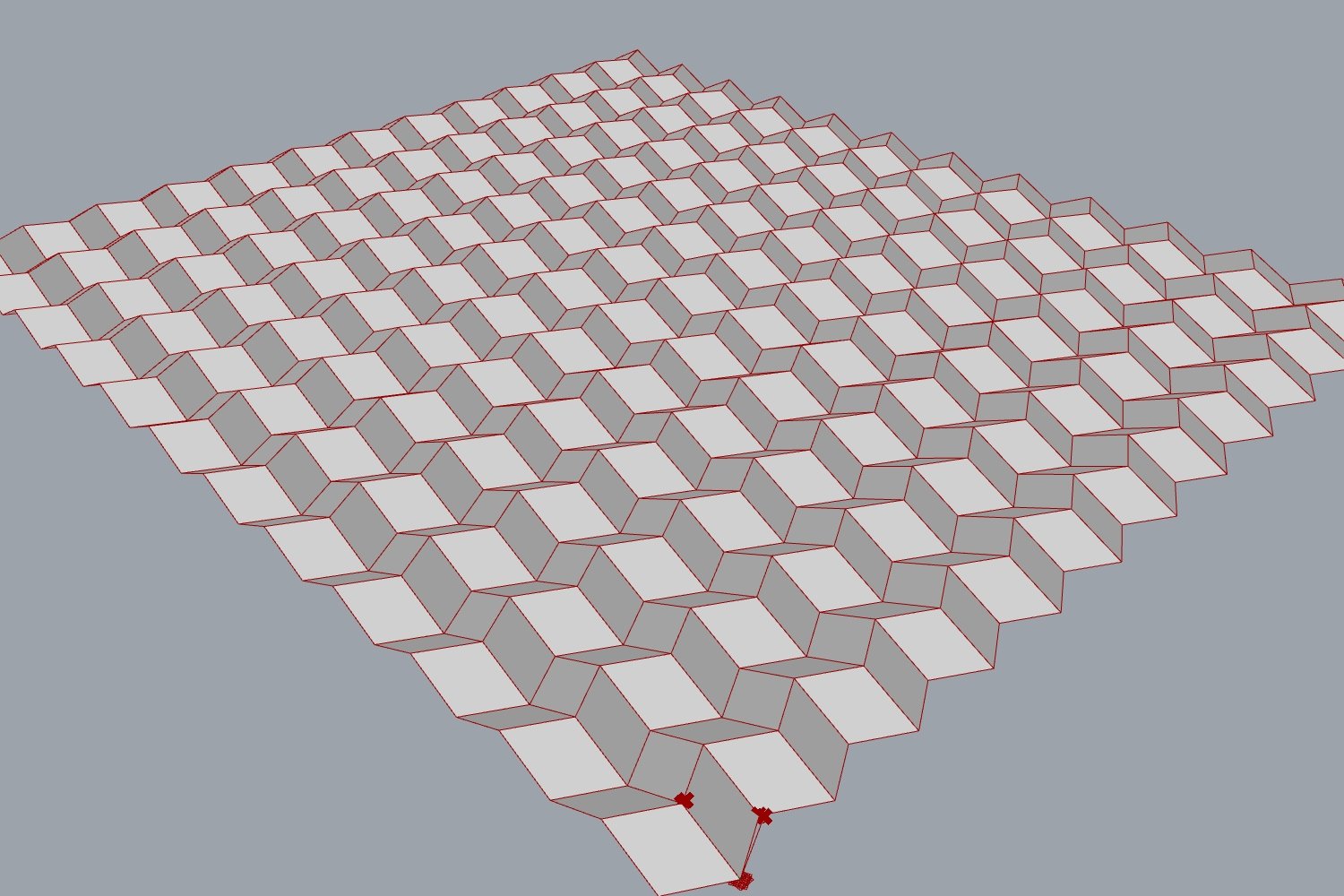

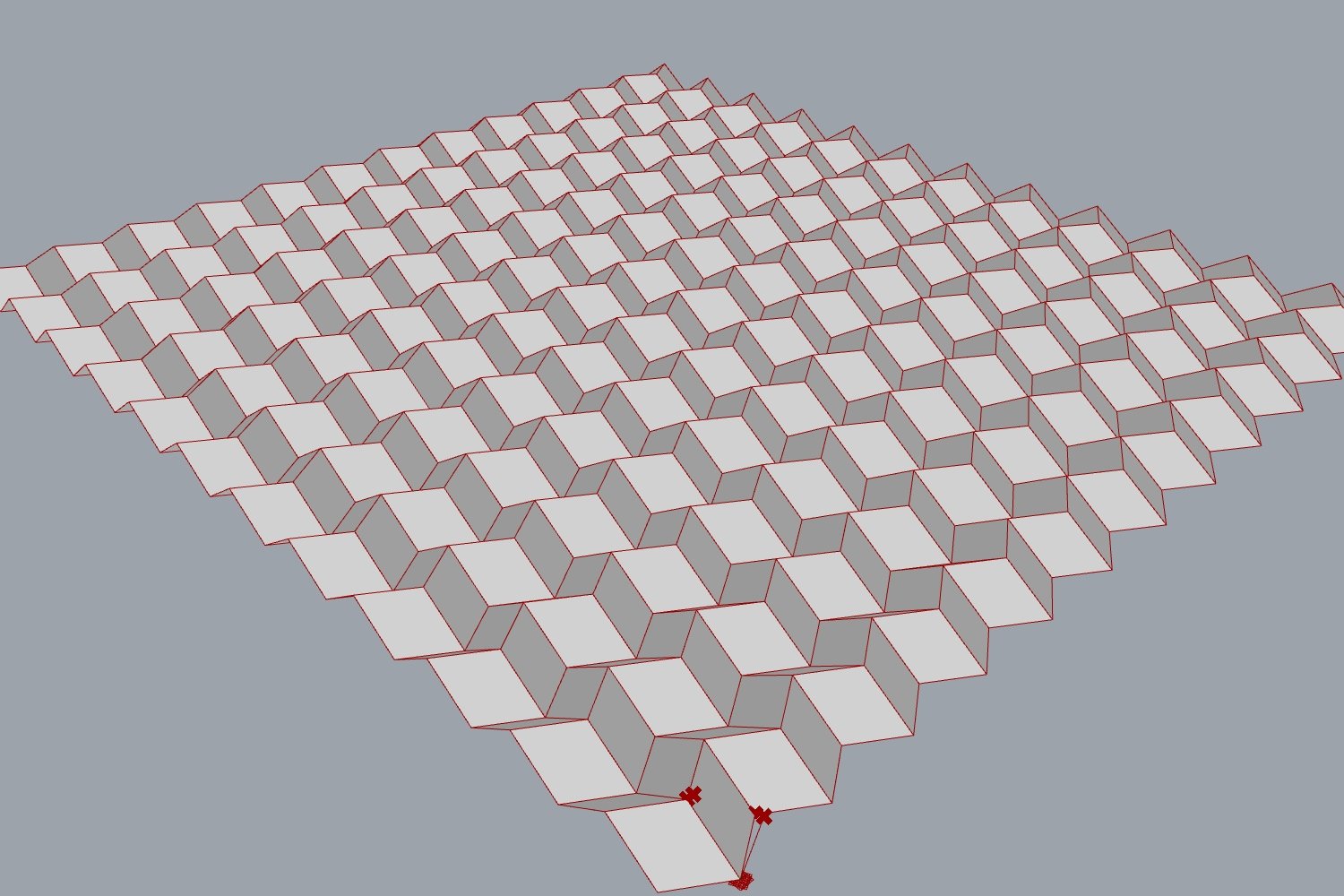

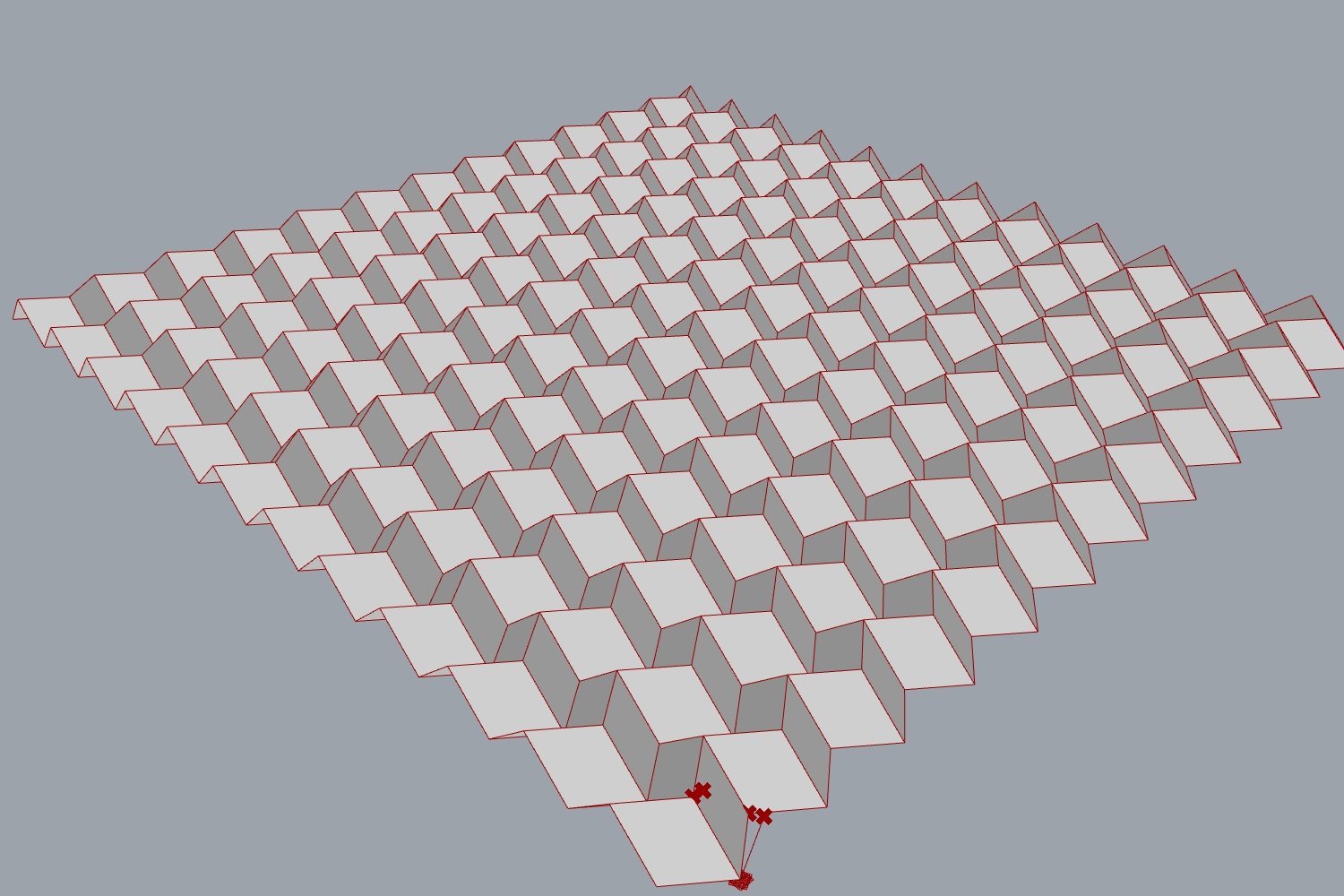

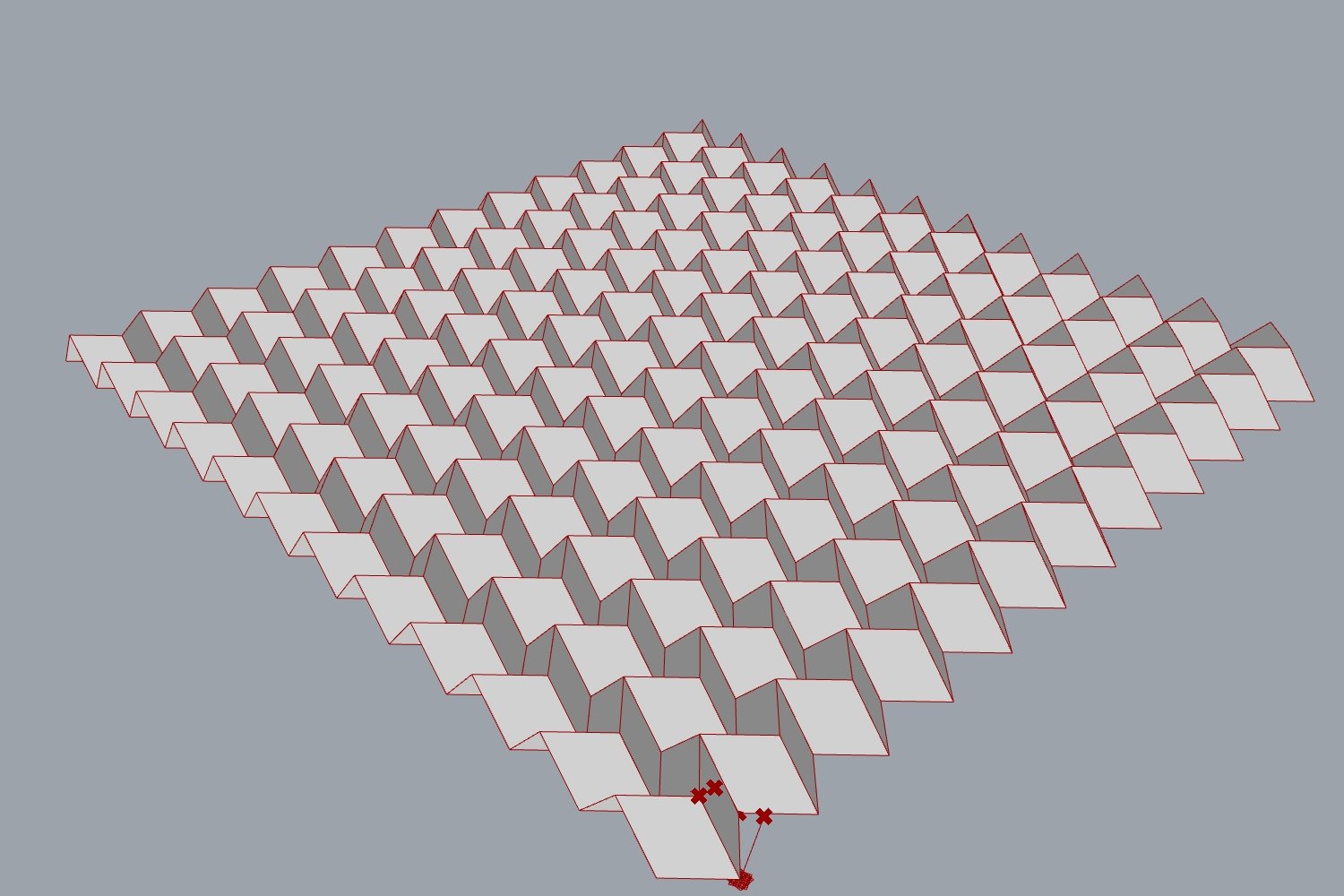

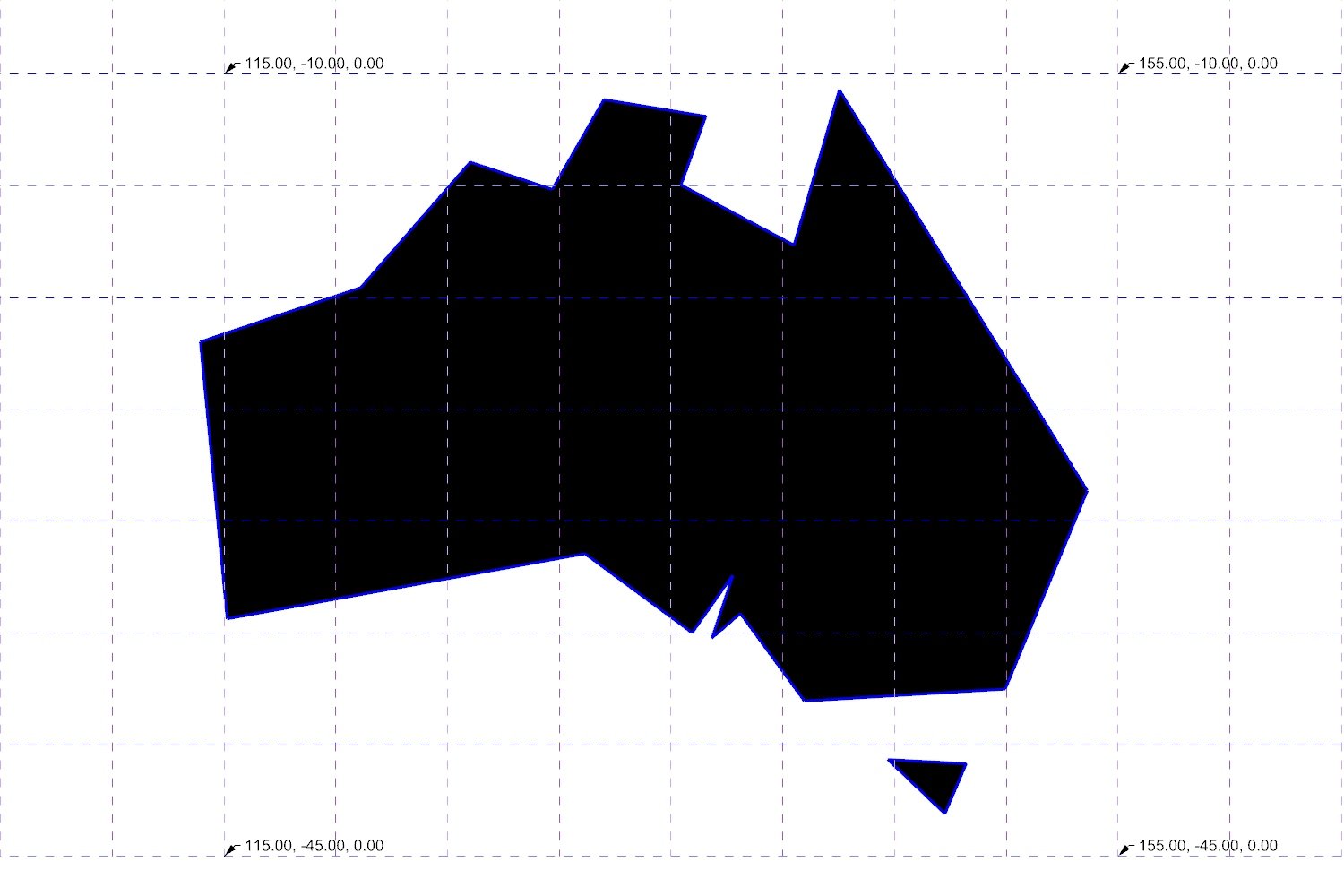

Nudel then asked ChatGPT to plot the data in the Excel files, producing the graphs above. The hockey-stick curve they show differs markedly from the relationship expected under the Solow-Swan model, in which marginal gains in capital per capita diminish as an economy matures.

ChatGPT explained its Excel outputs as follows:

The appearance of a "hockey stick" relationship in the graph of capital per capita over time can initially seem counterintuitive given the principle of diminishing returns to capital. However, this visual trend can be explained by several factors related to the parameters and dynamics of the Solow-Swan model:

1. Accumulation Phase: Early in the development process, significant increases in capital per worker can occur because the economy is starting from a lower base of capital. This phase often shows rapid growth as initial investments yield high returns due to relatively scarce capital.

2. Compound Growth: Even with diminishing returns to capital, as long as the savings rate remains constant and above the combined rates of population growth and depreciation, the economy can still accumulate capital. The model incorporates reinvestment of a portion of output, which compounds over time, leading to a rapid increase in capital stock initially.

3. Technological Growth: The model you've seen also includes technological progress, which enhances the productivity of both capital and labor. Technological growth can offset some of the diminishing returns to capital by effectively increasing the output generated from existing levels of capital and labor.

4. Transition to Steady State: Over a longer period, the economy should approach a steady state where the rate of capital accumulation aligns more closely with the rates of population growth and depreciation. In the steady state, increases in capital per worker taper off, and any further growth in output per worker is primarily due to technological advancements rather than capital deepening.

The "hockey stick" growth might be more pronounced depending on the initial conditions (like a very low initial capital per worker) and the specific parameter values for savings, depreciation, population growth, and technological progress. If the model's assumptions were altered (e.g., a lower savings rate or higher depreciation), the curve might flatten earlier or rise less steeply. This shape underscores how sensitive the model's outcomes can be to its input parameters.
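The explanation above can be checked with a small simulation. The following is a minimal sketch, not a reconstruction of the Excel files: it assumes a Cobb-Douglas production function, discrete yearly steps, and illustrative parameter values, and the function names `simulate_solow` and `steady_state_k_eff` are hypothetical. It tracks capital per worker alongside capital per effective worker, because in the textbook model with technological progress it is the latter that converges to a steady state, while capital per worker keeps growing at roughly the rate of technological progress.

```python
def simulate_solow(s=0.25, delta=0.05, n=0.01, g=0.02, alpha=0.33,
                   k0=1.0, periods=200):
    """Simulate a discrete-time Solow-Swan economy (illustrative parameters).

    s: savings rate, delta: depreciation rate, n: population growth rate,
    g: rate of labor-augmenting technological progress,
    alpha: capital share in Y = K**alpha * (A * L)**(1 - alpha).
    Returns two lists: capital per worker and capital per effective worker.
    """
    K, L, A = k0, 1.0, 1.0  # assumed starting values, chosen for illustration
    per_worker, per_effective = [], []
    for _ in range(periods):
        per_worker.append(K / L)
        per_effective.append(K / (A * L))
        Y = K ** alpha * (A * L) ** (1 - alpha)  # Cobb-Douglas output
        K = (1 - delta) * K + s * Y              # depreciation plus new investment
        L *= 1 + n                               # population growth
        A *= 1 + g                               # technological progress
    return per_worker, per_effective


def steady_state_k_eff(s=0.25, delta=0.05, n=0.01, g=0.02, alpha=0.33):
    """Steady-state capital per effective worker for the discrete model above."""
    return (s / ((1 + n) * (1 + g) - (1 - delta))) ** (1 / (1 - alpha))


if __name__ == "__main__":
    with_tech, k_eff = simulate_solow(periods=200)
    no_tech, _ = simulate_solow(g=0.0, periods=200)
    for year in (0, 50, 100, 199):
        print(year, round(with_tech[year], 2), round(no_tech[year], 2),
              round(k_eff[year], 2))
```

Under these assumptions, setting `g=0.0` makes capital per worker level off, which is the diminishing-returns shape the model is usually associated with. With `g` above zero, capital per worker keeps rising and its yearly increments grow, which produces a hockey-stick curve even though capital per effective worker settles at the value returned by `steady_state_k_eff`. Varying `s`, `delta`, or `k0` shows how strongly the plotted shape depends on the inputs.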